[Courtesy of Soul Machines]

Artificial intelligence (AI) technology is evolving beyond simple text processing to handle various forms of data, including voice, images, and videos, through the advancement of multi-modal capabilities.

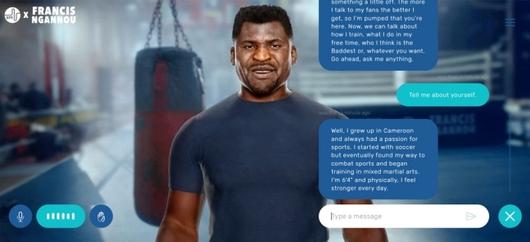

Soul Machines, a Silicon Valley startup, unveiled an avatar of the renowned mixed martial artist and professional boxer Francis Ngannou in October.

The company built an avatar closely resembling Ngannou and had ChatGPT learn information and speech patterns related to him, so the digital human can respond in English.

The immersion is heightened as the avatar blinks and moves its lips, simulating a conversation with Ngannou.

This is possible because ChatGPT can take in voice as text and generate text that is then converted back into speech.

Methods for practicing English conversation with ChatGPT are already widely shared on the internet.

Expensive phone and video English lessons with native speakers may be replaced by AI, industry insiders noted.

Multi-modal large language models (LLMs) could take over human tasks in many more areas than traditional text-centric AI could reach.

Jobs in service sectors, especially those relying on language skills, such as translation, language education, and customer-facing tasks, may face challenges with the advent of multi-modal AI.

While text-centric AI was limited to customer interactions via chatbots, multi-modal AI can extend its applications to phone calls and video consultations.

Another Silicon Valley startup, HeyGen, an AI video generator, recently introduced a video translation service that translates the speech of a speaker in a video into another language.

This service can replace a substantial portion of the work traditionally carried out by interpreters. It is gaining attention, especially among creators who want to reach audiences in other languages while keeping their original voice.

HeyGen’s service is likewise made possible by multi-modal AI. The system recognizes the voice in the video, translates the speech into a different language, and then voices the translation in the speaker's learned vocal tone.

As the generative AI market shifts rapidly toward multi-modal capabilities, Korean companies are also working hard to expand their presence in the burgeoning sector.

Korean platform giant Naver Corp. plans to gradually expand its text-centric generative AI search service Cue: by adding multi-modal technology.

Users will be able to obtain results more quickly by entering text, images, or voice in the search box.

LG Group’s AI artist Tilda also incorporates a multi-modal engine that understands both language and images.

Built on LG AI Research’s super-giant multi-modal AI EXAONE, Tilda can not only turn text into images but also communicate in both directions, describing images in text.

According to global analytics firm MarketsandMarkets, the multi-modal AI market is projected to grow at an annual rate of 35 percent to $4.5 billion in 2028 from $1 billion in 2023.
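
As a quick sanity check on the projection above, the compound annual growth rate implied by the two market-size figures can be recomputed. This is a minimal sketch, assuming the 35 percent figure is a compound rate over the five years from 2023 to 2028; the function name `cagr` is illustrative and not drawn from the MarketsandMarkets report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


start_billion = 1.0      # 2023 market size cited in the article
end_billion = 4.5        # 2028 projection cited in the article
years = 2028 - 2023      # assumption: five full years of compounding

# Rate implied by the two endpoints
implied_rate = cagr(start_billion, end_billion, years)

# Market size reached by compounding the cited 35 percent rate
projected = start_billion * (1 + 0.35) ** years

print(f"implied annual growth: {implied_rate:.1%}")
print(f"$1B at 35% for {years} years: ${projected:.2f}B")
```

Under that assumption, the implied rate comes out at roughly 35 percent and the compounded value at about $4.5 billion, so the cited figures are consistent with one another.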
